Links

https://lore.kernel.org/all/20230612093537.614161713@infradead.org/T/

Intro

As I was catching up with the scheduler's “change pattern” sched_change patches, I figured it was time I got up to speed with the guard zoology.

The first part of this post is a code exploration with some of my own musings, the second part is a TL;DR with what each helper does and when you should use

them (*)

(*) according to my own understanding, provided as-is without warranties of any kind, batteries not included.

It's __cleanup__ all the way down

The docstring for __cleanup kindly points us to the relevant gcc/clang documentation. Given I don't really speak clang, here's the relevant GCC bit:

https://gcc.gnu.org/onlinedocs/gcc/Common-Variable-Attributes.html#index-cleanup-variable-attribute

cleanup (cleanup_function)

The cleanup attribute runs a function when the variable goes out of

scope. This attribute can only be applied to auto function scope variables;

it may not be applied to parameters or variables with static storage

duration. The function must take one parameter, a pointer to a type

compatible with the variable. The return value of the function (if any) is

ignored.

When multiple variables in the same scope have cleanup attributes, at exit

from the scope their associated cleanup functions are run in reverse order

of definition (last defined, first cleanup).

So we get to write a function that takes a pointer to the variable and does cleanup for it whenever the variable goes out of scope. Neat.

DEFINE_FREE

That's the first one we'll meet in include/linux/cleanup.h and the most straightforward.

* DEFINE_FREE(name, type, free):

* simple helper macro that defines the required wrapper for a __free()

* based cleanup function. @free is an expression using '_T' to access the

* variable. @free should typically include a NULL test before calling a

* function, see the example below.

Long story short, that's a __cleanup variable definition with some extra sprinkles on top:

#define __cleanup(func) __attribute__((__cleanup__(func)))

#define __free(_name) __cleanup(__free_##_name)

#define DEFINE_FREE(_name, _type, _free) \

static __always_inline void __free_##_name(void *p) { _type _T = *(_type *)p; _free; }

So we can e.g. define a kfree() cleanup type and stick that onto any kmalloc()'d variable to get automagic cleanup without any goto's. Some languages call that a smart pointer.

DEFINE_FREE(kfree, void *, if (_T) kfree(_T))

void *alloc_obj(...)

{

struct obj *p __free(kfree) = kmalloc(...);

if (!p)

return NULL;

if (!init_obj(p))

return NULL;

return_ptr(p); // This does a pointer shuffle to prevent the kfree() from happening

}

I won't get into the return_ptr() faff, but if you have a look at it and wonder what's going on, it's mostly going to be because of having to do the shuffle with no double evaluation. This is relevant: https://lore.kernel.org/lkml/CAHk-=wiOXePAqytCk6JuiP6MeePL6ksDYptE54hmztiGLYihjA@mail.gmail.com/

DEFINE_CLASS

This one is pretty much going to be DEFINE_FREE() but with an added quality of life feature in the form of a constructor:

* DEFINE_CLASS(name, type, exit, init, init_args...):

* helper to define the destructor and constructor for a type.

* @exit is an expression using '_T' -- similar to FREE above.

* @init is an expression in @init_args resulting in @type

#define DEFINE_CLASS(_name, _type, _exit, _init, _init_args...) \

typedef _type class_##_name##_t; \

static __always_inline void class_##_name##_destructor(_type *p) \

{ _type _T = *p; _exit; } \

static __always_inline _type class_##_name##_constructor(_init_args) \

{ _type t = _init; return t; }

#define CLASS(_name, var) \

class_##_name##_t var __cleanup(class_##_name##_destructor) = \

class_##_name##_constructor

You'll note that yes, it can be expressed purely as a DEFINE_FREE(), but it saves us from a bit of repetition, and will enable us to craft stuff involving locks later on:

DEFINE_CLASS(fdget, struct fd, fdput(_T), fdget(fd), int fd)

void foo(void)

{

fd = ...;

CLASS(fdget, f)(fd);

if (fd_empty(f))

return -EBADF;

// use 'f' without concern

}

DEFINE_FREE(fdput, struct fd *, if (_T) fdput(_T))

void foo(void)

{

fd = ...;

struct fd *f __free(fdput) = fdget(fd);

if (fd_empty(f))

return -EBADF;

// use 'f' without concern

Futex_hash_bucket case

For a more complete example:

DEFINE_CLASS(hb, struct futex_hash_bucket *,

if (_T) futex_hash_put(_T),

futex_hash(key), union futex_key *key);

int futex_wake(u32 __user *uaddr, unsigned int flags, int nr_wake, u32 bitset)

{

union futex_key key = FUTEX_KEY_INIT;

DEFINE_WAKE_Q(wake_q);

int ret;

ret = get_futex_key(uaddr, flags, &key, FUTEX_READ);

if (unlikely(ret != 0))

return ret;

CLASS(hb, hb)(&key);

/* Make sure we really have tasks to wakeup */

if (!futex_hb_waiters_pending(hb))

return ret;

spin_lock(&hb->lock);

/* ... */

}

Using gcc -E to stop compilation after the preprocessor has expanded all of our fancy macros (*), the resulting code is fairly readable modulo the typedef:

typedef struct futex_hash_bucket * class_hb_t;

static inline __attribute__((__gnu_inline__)) __attribute__((__unused__))

__attribute__((no_instrument_function)) __attribute__((__always_inline__))

void

class_hb_destructor(struct futex_hash_bucket * *p)

{

struct futex_hash_bucket * _T = *p;

if (_T)

futex_hash_put(_T);

}

static inline __attribute__((__gnu_inline__)) __attribute__((__unused__))

__attribute__((no_instrument_function)) __attribute__((__always_inline__))

struct futex_hash_bucket *

class_hb_constructor(union futex_key *key)

{

struct futex_hash_bucket * t = futex_hash(key);

return t;

}

int futex_wake(u32 *uaddr, unsigned int flags, int nr_wake, u32 bitset)

{

struct futex_q *this, *next;

union futex_key key = (union futex_key) { .both = { .ptr = 0ULL } };

struct wake_q_head wake_q = { ((struct wake_q_node *) 0x01), &wake_q.first };

int ret;

if (!bitset)

return -22;

ret = get_futex_key(uaddr, flags, &key, FUTEX_READ);

if (__builtin_expect(!!(ret != 0), 0))

return ret;

if ((flags & 0x0100) && !nr_wake)

return 0;

class_hb_t hb __attribute__((__cleanup__(class_hb_destructor))) = class_hb_constructor(&key);

if (!futex_hb_waiters_pending(hb))

return ret;

spin_lock(&hb->lock);

/* ... */

}

(*) I use make V=1 on the file I want to expand, copy the big command producing the .o, ditch the -Wp,-MMD,**.o.d part and add a -E to it.

DEFINE_GUARD

For now, ignore the CONDITIONAL and LOCK_PTR stuff, this is only relevant to the scoped & conditional guards which we'll get to later.

#define DEFINE_CLASS_IS_GUARD(_name) \

__DEFINE_CLASS_IS_CONDITIONAL(_name, false); \

__DEFINE_GUARD_LOCK_PTR(_name, _T)

#define DEFINE_GUARD(_name, _type, _lock, _unlock) \

DEFINE_CLASS(_name, _type, if (!__GUARD_IS_ERR(_T)) { _unlock; }, ({ _lock; _T; }), _type _T); \

DEFINE_CLASS_IS_GUARD(_name)

#define guard(_name) \

CLASS(_name, __UNIQUE_ID(guard))

So it's a CLASS with a constructor and destructor, but the added bonus is the automagic __cleanup variable definition.

Why is that relevant? Well, consider locks. You don't declare a variable for a lock acquisition & release, you manipulate an already-allocated object (e.g. a mutex). However, no variable declaration means no __cleanup. So this just declares a variable to slap __cleanup onto it and have an automagic out-of-scope cleanup callback.

Let's have a look at an example in the thermal subsystem with a mutex critical section:

DEFINE_GUARD(cooling_dev, struct thermal_cooling_device *, mutex_lock(&_T->lock),

mutex_unlock(&_T->lock))

static ssize_t

cur_state_store(struct device *dev, struct device_attribute *attr,

const char *buf, size_t count)

{

struct thermal_cooling_device *cdev = to_cooling_device(dev);

unsigned long state;

int result;

/* ... */

if (state > cdev->max_state)

return -EINVAL;

guard(cooling_dev)(cdev);

result = cdev->ops->set_cur_state(cdev, state);

if (result)

return result;

The preprocessor output looks like so:

typedef struct thermal_cooling_device * class_cooling_dev_t;

static inline __attribute__((__gnu_inline__)) __attribute__((__unused__))

__attribute__((no_instrument_function)) __attribute__((__always_inline__)) void

class_cooling_dev_destructor(struct thermal_cooling_device * *p)

{

struct thermal_cooling_device * _T = *p;

if (!({

unsigned long _rc = (unsigned long)(_T);

__builtin_expect(!!((_rc - 1) >= -4095 - 1), 0);

})) {

mutex_unlock(&_T->lock);

};

}

static inline __attribute__((__gnu_inline__))

__attribute__((__unused__)) __attribute__((no_instrument_function))

__attribute__((__always_inline__)) struct thermal_cooling_device *

class_cooling_dev_constructor(struct thermal_cooling_device * _T)

{

struct thermal_cooling_device * t =

({ mutex_lock(&_T->lock); _T; });

return t;

}

static __attribute__((__unused__)) const bool class_cooling_dev_is_conditional = false;

static inline __attribute__((__gnu_inline__)) __attribute__((__unused__))

__attribute__((no_instrument_function)) __attribute__((__always_inline__)) void *

class_cooling_dev_lock_ptr(class_cooling_dev_t *_T)

{

void *_ptr = (void *)(unsigned long)*(_T);

if (IS_ERR(_ptr)) {

_ptr = ((void *)0);

}

return _ptr;

}

static inline __attribute__((__gnu_inline__)) __attribute__((__unused__))

__attribute__((no_instrument_function)) __attribute__((__always_inline__)) int

class_cooling_dev_lock_err(class_cooling_dev_t *_T)

{

long _rc = (unsigned long)*(_T);

if (!_rc) {

_rc = -16;

}

if (!__builtin_expect(!!((unsigned long)(void *)(_rc) >= (unsigned long)-4095), 0)) {

_rc = 0;

}

return _rc;

}

static ssize_t

cur_state_store(struct device *dev, struct device_attribute *attr,

const char *buf, size_t count)

{

struct thermal_cooling_device *cdev = ({ void *__mptr = (void *)(dev); _Static_assert(__builtin_types_compatible_p(typeof(*(dev)), typeof(((struct thermal_cooling_device *)0)->device)) || __builtin_types_compatible_p(typeof(*(dev)), typeof(void)), "pointer type mismatch in container_of()"); ((struct thermal_cooling_device *)(__mptr - __builtin_offsetof(struct thermal_cooling_device, device))); });

unsigned long state;

int result;

if (sscanf(buf, "%ld\n", &state) != 1)

return -22;

if ((long)state < 0)

return -22;

if (state > cdev->max_state)

return -22;

class_cooling_dev_t __UNIQUE_ID_guard_435 __attribute__((__cleanup__(class_cooling_dev_destructor))) = class_cooling_dev_constructor(cdev);

result = cdev->ops->set_cur_state(cdev, state);

if (result)

return result;

thermal_cooling_device_stats_update(cdev, state);

return count;

}

DEFINE_LOCK_GUARD

Okay, we have sort-of-smart pointers, classes, guards for locks, what's next? Well, certain locks need more than just a pointer for the lock & unlock operations. For instance, the scheduler's runqueue locks need both a struct rq pointer and a struct rq_flags pointer.

So LOCK_GUARD's are going to be enhanced GUARD's manipulating a composite type instead of a single pointer:

#define __DEFINE_UNLOCK_GUARD(_name, _type, _unlock, ...) \

typedef struct { \

_type *lock; \

__VA_ARGS__; \

} class_##_name##_t; \

Note that there is also the “no pointer” special case, which is when there is no accessible type for the manipulated lock – think preempt_disable(), migrate_disable(), rcu_read_lock(); Just like for GUARD, we still declare a variable to slap __cleanup onto it.

Let's look at the RCU case:

DEFINE_LOCK_GUARD_0(rcu,

do {

rcu_read_lock();

/*

* sparse doesn't call the cleanup function,

* so just release immediately and don't track

* the context. We don't need to anyway, since

* the whole point of the guard is to not need

* the explicit unlock.

*/

__release(RCU);

} while (0),

rcu_read_unlock())

void wake_up_if_idle(int cpu)

{

struct rq *rq = cpu_rq(cpu);

guard(rcu)();

if (is_idle_task(rcu_dereference(rq->curr))) {

// ....

}

}

static __attribute__((__unused__)) const bool class_rcu_is_conditional = false;

typedef struct {

void *lock;

} class_rcu_t;

static inline __attribute__((__gnu_inline__)) __attribute__((__unused__))

__attribute__((no_instrument_function)) __attribute__((__always_inline__))

void

class_rcu_destructor(class_rcu_t *_T)

{

if (!({

unsigned long _rc = ( unsigned long)(_T->lock);

__builtin_expect(!!((_rc - 1) >= -4095 - 1), 0);

})) {

rcu_read_unlock();

}

}

static inline __attribute__((__gnu_inline__)) __attribute__((__unused__))

__attribute__((no_instrument_function)) __attribute__((__always_inline__))

void *

class_rcu_lock_ptr(class_rcu_t *_T)

{

void *_ptr = (void *)( unsigned long)*(&_T->lock);

if (IS_ERR(_ptr)) {

_ptr = ((void *)0);

}

return _ptr;

}

static inline __attribute__((__gnu_inline__)) __attribute__((__unused__))

__attribute__((no_instrument_function)) __attribute__((__always_inline__))

int

class_rcu_lock_err(class_rcu_t *_T)

{

long _rc = ( unsigned long)*(&_T->lock);

if (!_rc) {

_rc = -16;

}

if (!__builtin_expect(!!((unsigned long)(void *)(_rc) >= (unsigned long)-4095), 0)) {

_rc = 0;

}

return _rc;

}

static inline __attribute__((__gnu_inline__)) __attribute__((__unused__))

__attribute__((no_instrument_function)) __attribute__((__always_inline__))

class_rcu_t

class_rcu_constructor(void)

{

class_rcu_t _t = { .lock = (void*)1 }, *_T __attribute__((__unused__)) = &_t;

do {

rcu_read_lock();

(void)0; // __release(RCU); just for sparse, see comment in definition

} while (0);

return _t;

}

void wake_up_if_idle(int cpu)

{

struct rq *rq = (&(*({ do { const void __seg_gs *__vpp_verify = (typeof((&(runqueues)) + 0))((void *)0); (void)__vpp_verify; } while (0); ({ unsigned long __ptr; __asm__ ("" : "=r"(__ptr) : "0"((__typeof_unqual__(*((&(runqueues)))) *)(( unsigned long)((&(runqueues)))))); (typeof((__typeof_unqual__(*((&(runqueues)))) *)(( unsigned long)((&(runqueues)))))) (__ptr + (((__per_cpu_offset[((cpu))])))); }); })));

class_rcu_t __UNIQUE_ID_guard_1486 __attribute__((__cleanup__(class_rcu_destructor))) =

class_rcu_constructor();

if (is_idle_task(...) {

// ...

}

}

Let's look at the runqueue lock:

DEFINE_LOCK_GUARD_1(rq_lock_irqsave, struct rq,

rq_lock_irqsave(_T->lock, &_T->rf),

rq_unlock_irqrestore(_T->lock, &_T->rf),

struct rq_flags rf)

static void sched_balance_update_blocked_averages(int cpu)

{

struct rq *rq = cpu_rq(cpu);

guard(rq_lock_irqsave)(rq);

update_rq_clock(rq);

__sched_balance_update_blocked_averages(rq);

}

static __attribute__((__unused__)) const bool class_rq_lock_irqsave_is_conditional = false;

typedef struct {

struct rq *lock;

struct rq_flags rf;

} class_rq_lock_irqsave_t;

static inline __attribute__((__gnu_inline__)) __attribute__((__unused__))

__attribute__((no_instrument_function)) __attribute__((__always_inline__))

void

class_rq_lock_irqsave_destructor(class_rq_lock_irqsave_t *_T)

{

if (!({

unsigned long _rc = ( unsigned long)(_T->lock);

__builtin_expect(!!((_rc - 1) >= -4095 - 1), 0);

})) {

rq_unlock_irqrestore(_T->lock, &_T->rf);

}

}

static inline __attribute__((__gnu_inline__)) __attribute__((__unused__))

__attribute__((no_instrument_function)) __attribute__((__always_inline__))

void *

class_rq_lock_irqsave_lock_ptr(class_rq_lock_irqsave_t *_T)

{

void *_ptr = (void *)( unsigned long)*(&_T->lock);

if (IS_ERR(_ptr)) {

_ptr = ((void *)0);

}

return _ptr;

}

static inline __attribute__((__gnu_inline__)) __attribute__((__unused__))

__attribute__((no_instrument_function)) __attribute__((__always_inline__))

int

class_rq_lock_irqsave_lock_err(class_rq_lock_irqsave_t *_T)

{

long _rc = ( unsigned long)*(&_T->lock);

if (!_rc) {

_rc = -16;

}

if (!__builtin_expect(!!((unsigned long)(void *)(_rc) >= (unsigned long)-4095), 0)) {

_rc = 0;

}

return _rc;

}

static inline __attribute__((__gnu_inline__)) __attribute__((__unused__))

__attribute__((no_instrument_function)) __attribute__((__always_inline__))

class_rq_lock_irqsave_t

class_rq_lock_irqsave_constructor(struct rq *l)

{

class_rq_lock_irqsave_t _t = { .lock = l }, *_T = &_t;

rq_lock_irqsave(_T->lock, &_T->rf);

return _t;

}

static void sched_balance_update_blocked_averages(int cpu)

{

struct rq *rq = (&(*({ do { const void __seg_gs *__vpp_verify = (typeof((&(runqueues)) + 0))((void *)0); (void)__vpp_verify; } while (0); ({ unsigned long __ptr; __asm__ ("" : "=r"(__ptr) : "0"((__typeof_unqual__(*((&(runqueues)))) *)(( unsigned long)((&(runqueues)))))); (typeof((__typeof_unqual__(*((&(runqueues)))) *)(( unsigned long)((&(runqueues)))))) (__ptr + (((__per_cpu_offset[((cpu))])))); }); })));

class_rq_lock_irqsave_t __UNIQUE_ID_guard_1377

__attribute__((__cleanup__(class_rq_lock_irqsave_destructor))) =

class_rq_lock_irqsave_constructor(rq);

update_rq_clock(rq);

__sched_balance_update_blocked_averages(rq);

}

SCOPES

Scope creation is slightly different for classes and guards, but follow the same principle.

Class

#define __scoped_class(_name, var, _label, args...) \

for (CLASS(_name, var)(args); ; ({ goto _label; })) \

if (0) { \

_label: \

break; \

} else

#define scoped_class(_name, var, args...) \

__scoped_class(_name, var, __UNIQUE_ID(label), args)

That for+if+goto trinity looks a bit unholy at first, but let's look at what the requirements are for a macro that lets us create a new scope:

– create a new scope

– declare the __cleanup variable in that new scope

– make the macro usable either with a single statement, or with curly braces

A for loop gives us the declaration and the scope. However that for loop needs to run once, and it'd be a shame to have to declare a loop counter. The “run exactly once” mechanism is thus encoded in the form of the if+goto.

Consider:

for (CLASS(_name, var)(args); ; ({ goto _label; }))

if (0) {

_label:

break;

} else {

stmt;

}

The execution order will be:

CLASS(_name, var)(args);

stmt;

goto _label;

break;

We thus save ourselves the need for an extra variable at the cost of mild code reader confusion, a common trick used in the kernel.

Guards

For guard scopes, we find the same for+if+goto construct but with some added checks. For regular (unconditional) guards, this is pretty much the same as for CLASS'es:

/*

* Helper macro for scoped_guard().

*

* Note that the "!__is_cond_ptr(_name)" part of the condition ensures that

* compiler would be sure that for the unconditional locks the body of the

* loop (caller-provided code glued to the else clause) could not be skipped.

* It is needed because the other part - "__guard_ptr(_name)(&scope)" - is too

* hard to deduce (even if could be proven true for unconditional locks).

*/

#define __scoped_guard(_name, _label, args...) \

for (CLASS(_name, scope)(args); \

__guard_ptr(_name)(&scope) || !__is_cond_ptr(_name); \

({ goto _label; })) \

if (0) { \

_label: \

break; \

} else

#define scoped_guard(_name, args...) \

__scoped_guard(_name, __UNIQUE_ID(label), args)

For conditional guards, we mainly factor in the fact that the constructor can “fail”. This is relevant for e.g. trylocks where the lock acquisition isn't guaranteed to succeed.

#define __scoped_cond_guard(_name, _fail, _label, args...) \

for (CLASS(_name, scope)(args); true; ({ goto _label; })) \

if (!__guard_ptr(_name)(&scope)) { \

BUILD_BUG_ON(!__is_cond_ptr(_name)); \

_fail; \

_label: \

break; \

} else

#define scoped_cond_guard(_name, _fail, args...) \

__scoped_cond_guard(_name, _fail, __UNIQUE_ID(label), args)

So in the end, that __DEFINE_CLASS_IS_CONDITIONAL() faff is there:

– To help optimize unconditional guard scopes

– To ensure conditional guard scopes are used correctly (i.e. the lock acquisition failure is expected)

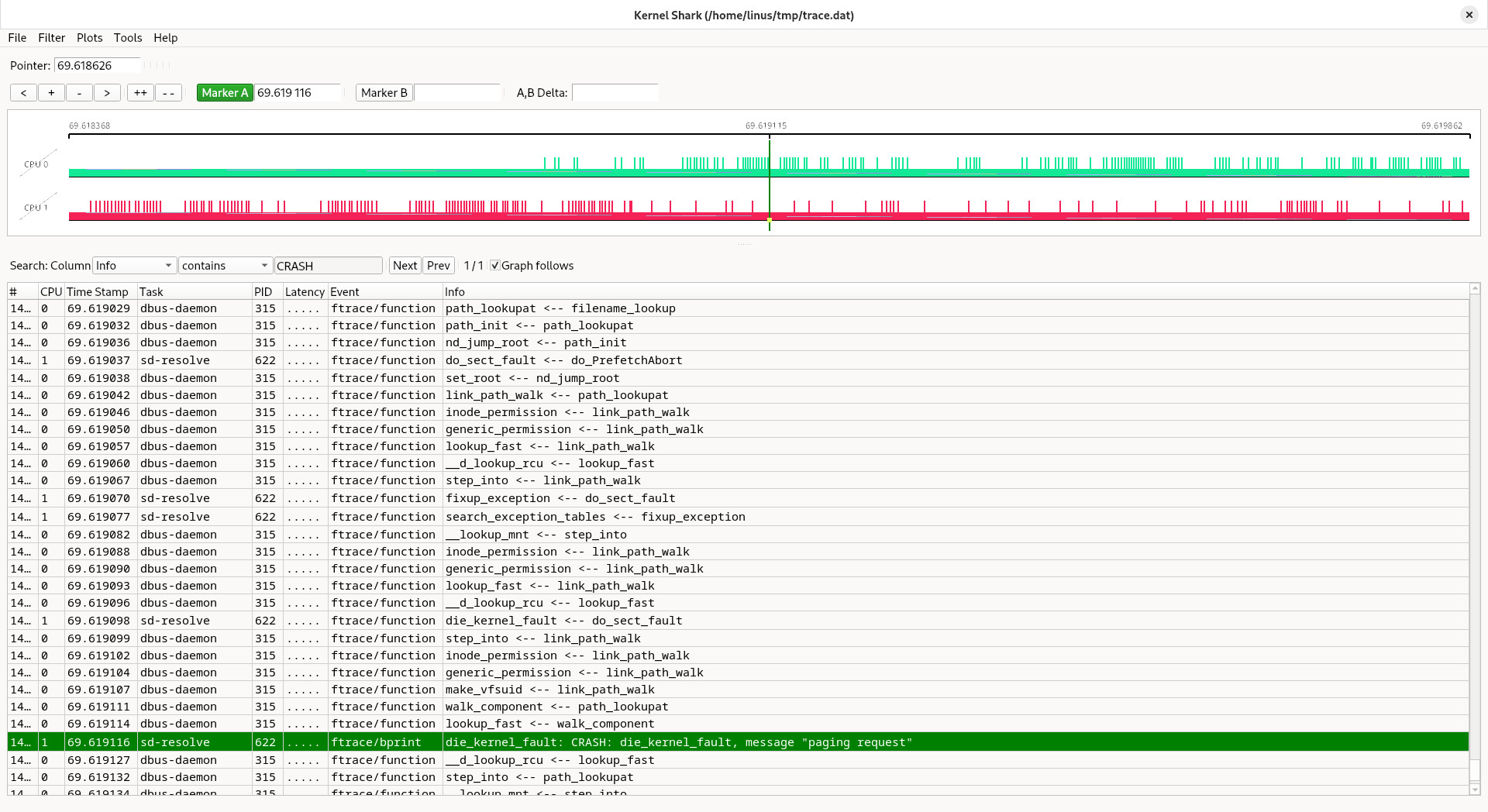

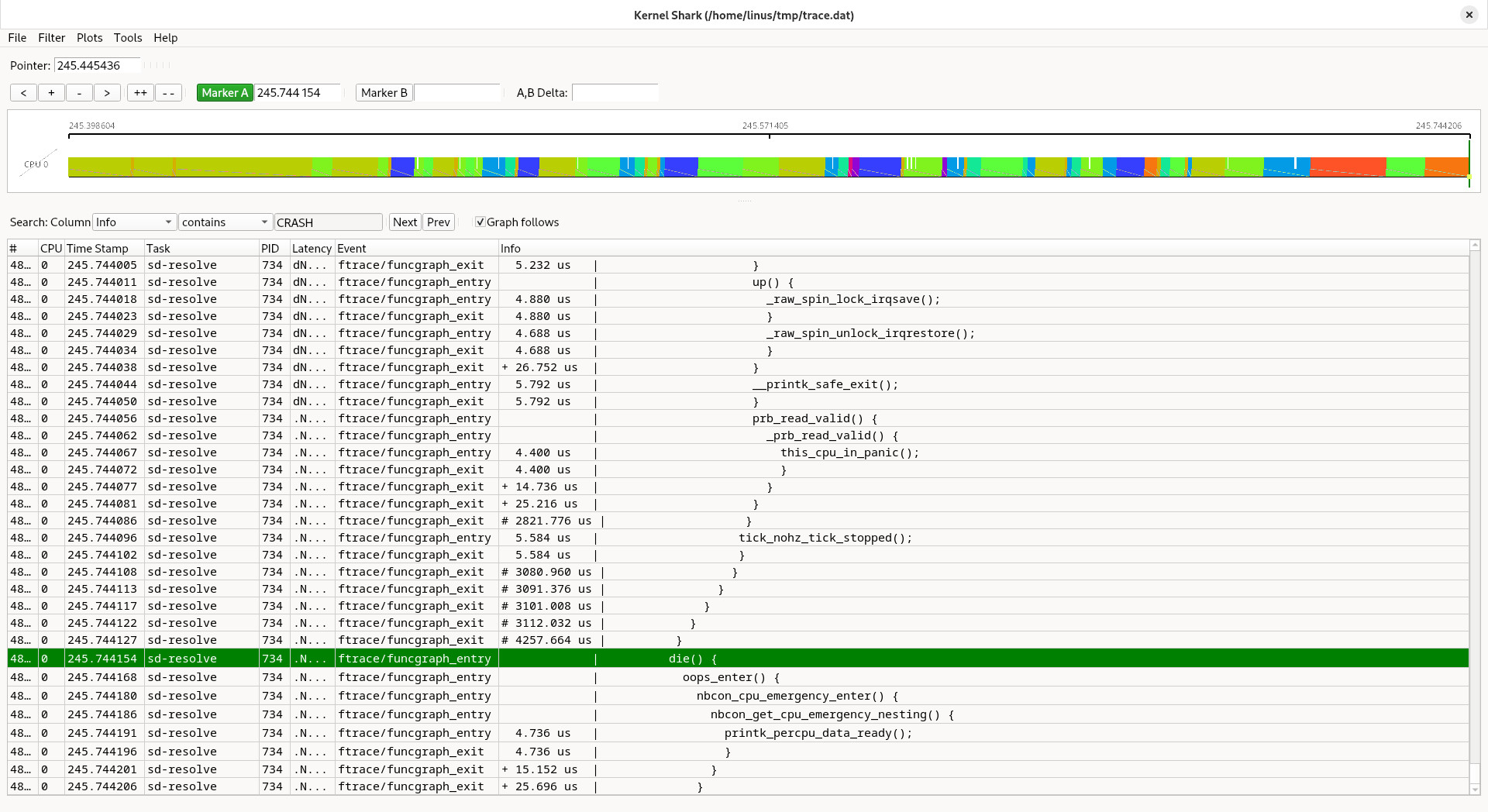

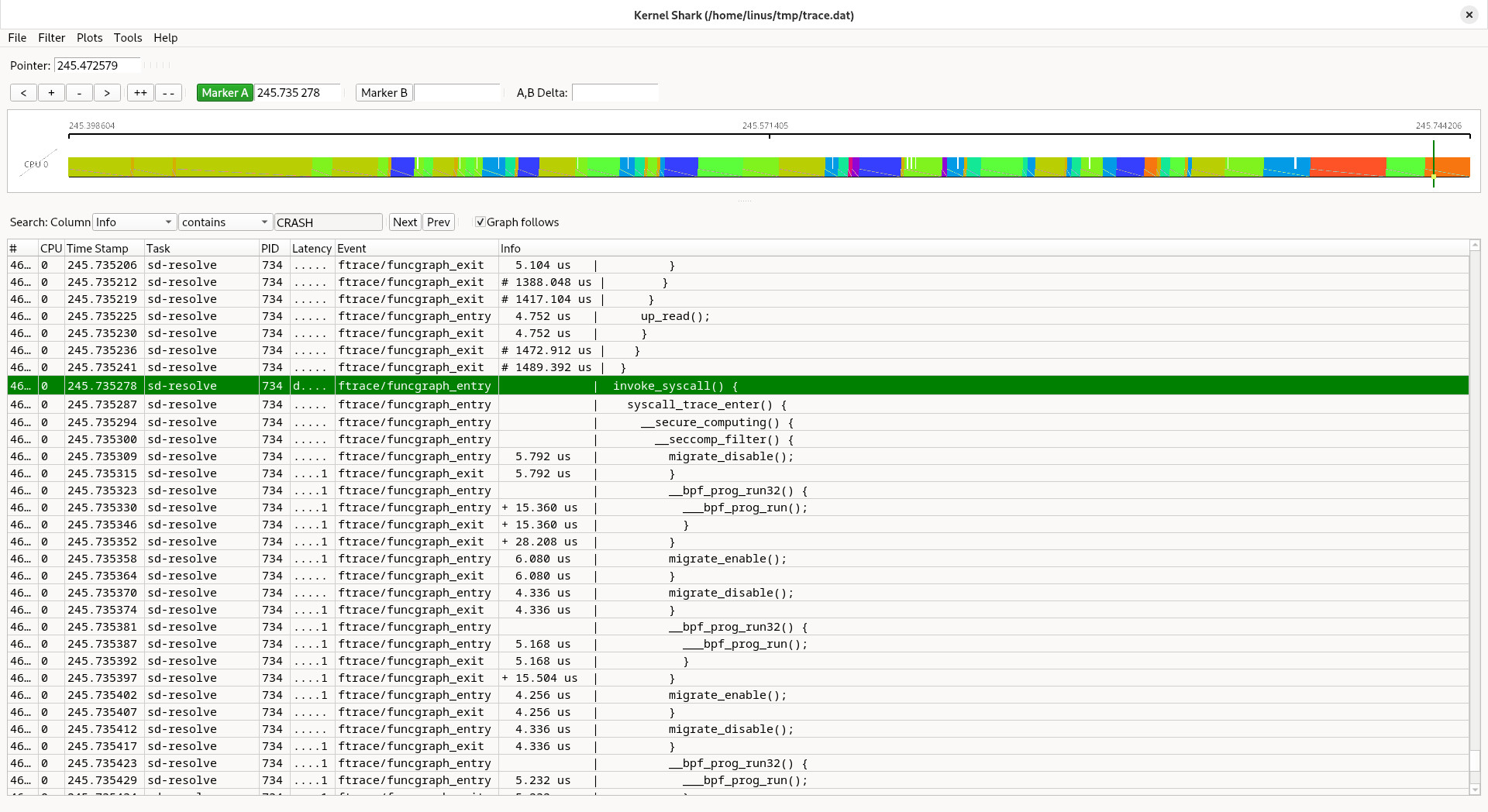

Debuggability

You'll note that while guards delete an entire class of error associated with goto's, they shuffle the code around.

From my experimentation, if you put the constructor and the destructor on a separate line in the CLASS/GUARD definition, you'll at least be able to tell them apart during a splat:

DEFINE_LOCK_GUARD_1(raw_spinlock_irqsave_bug, raw_spinlock_t,

raw_spin_lock_irqsave(_T->lock, _T->flags),

spinlock.h:571: raw_spin_unlock_irqrestore_bug(_T->lock, _T->flags),

unsigned long flags)

int try_to_wake_up(struct task_struct *p, unsigned int state, int wake_flags)

{

...

core.c:4108 scoped_guard (raw_spinlock_irqsave_bug, &p->pi_lock) {

}

...

}

[ 0.216287] kernel BUG at ./include/linux/spinlock.h:571!

[ 0.217115] Oops: invalid opcode: 0000 [#1] SMP PTI

[ 0.217285] Hardware name: QEMU Standard PC (i440FX + PIIX, 1996), BIOS rel-1.16.3-0-ga6ed6b701f0a-prebuilt.qemu.org 04/01/2014

[ 0.217285] RIP: 0010:try_to_wake_up (./include/linux/spinlock.h:569 (discriminator 6) kernel/sched/core.c:4108 (discriminator 6))

[ 0.217285] Call Trace:

[ 0.217285] <TASK>

[ 0.217285] ? __pfx_kthread_worker_fn (kernel/kthread.c:966)

[ 0.217285] __kthread_create_on_node (kernel/kthread.c:535)

[ 0.217285] kthread_create_worker_on_node (kernel/kthread.c:1043 (discriminator 1) kernel/kthread.c:1073 (discriminator 1))

[ 0.217285] ? vprintk_emit (kernel/printk/printk.c:4625 kernel/printk/printk.c:2433)

[ 0.217285] workqueue_init (kernel/workqueue.c:7873 kernel/workqueue.c:7922)

[ 0.217285] kernel_init_freeable (init/main.c:1675)

[ 0.217285] ? __pfx_kernel_init (init/main.c:1570)

[ 0.217285] kernel_init (init/main.c:1580)

[ 0.217285] ret_from_fork (arch/x86/kernel/process.c:164)

[ 0.217285] ? __pfx_kernel_init (init/main.c:1570)

[ 0.217285] ret_from_fork_asm (arch/x86/entry/entry_64.S:259)

[ 0.217285] </TASK>

TL;DR

DEFINE_FREE()

- Sort-of-smart pointer

- Definition tied to the freeing function, e.g.

DEFINE_KFREE(kfree,...)

DEFINE_CLASS()

- Like

DEFINE_FREE() but with factorized initialization.

DEFINE_GUARD()

- Like

DEFINE_CLASS() but you don't need the underlying variable

- e.g. locks don't require declaring a variable, you just lock and unlock them.

DEFINE_LOCK_GUARD()

- Like

DEFINE_GUARD() but when a single pointer isn't sufficient for lock/unlock operations.

- Also for “special” locks with no underlying type such as RCU, preempt or

migrate_disable.