KASan for ARM32 Decompression Stop

This is a retrospect of my work on the KASan Kernel Address Sanitizer for the ARM32 platform. The name is a pun on the diving decompression stop that is something you perform after going down below the surface to avoid decompression sickness.

Where It All Began

The AddressSanitizer (ASan) is a really clever invention by Google, hats off. It is one of those development tools that, like git, just took over the world in a short time. It was invented by some smart Russians, especially Андрей Коновалов (Andrey Konovalov) and Дмитрий Вьюков (Dmitry Vyukov). It appears to be not just funded by Google but also part of a PhD thesis work.

The idea with ASan is to help ensure memory safety by intercepting all memory accesses through compiler instrumentation, and consequently providing “ASan splats” (runtime problem detections) while stressing the code. Code instrumented with ASan gets significantly slower than normal and uses up a bunch of memory for “shadowing” (I will explain this) making it a pure development tool: it is not intended to be enabled on production systems.

The way that ASan instruments code is by linking every load and store into symbols like these:

__asan_load1(unsigned long addr);

__asan_store1(unsigned long addr);

__asan_load2(unsigned long addr);

__asan_store2(unsigned long addr);

__asan_load4(unsigned long addr);

__asan_store4(unsigned long addr);

__asan_load8(unsigned long addr);

__asan_store8(unsigned long addr);

__asan_load16(unsigned long addr);

__asan_store16(unsigned long addr);

As you can guess, these calls load or store 1, 2, 4, 8 or 16 bytes of memory in one chunk at the virtual address addr, and reflect how the compiler thinks that the compiled code (usually C) accesses memory. ASan intercepts all reads and writes by placing itself between the executing program and any memory management. The above symbols can be implemented by any runtime environment. The address reflects what the compiler assumes the runtime environment thinks about the (usually virtual) memory where the program will execute.
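To make the interception concrete, here is a minimal sketch of what the compiler conceptually emits around a plain load and store. The demo_asan_load4() and demo_asan_store4() functions are hypothetical stand-ins for a runtime's __asan_load4() and __asan_store4(); here they only count calls, where a real runtime would check the address.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical stand-ins for a runtime's __asan_load4()/__asan_store4().
 * A real runtime would validate addr; these just count the calls. */
static unsigned long loads_seen;
static unsigned long stores_seen;

static void demo_asan_load4(unsigned long addr)
{
	(void)addr;
	loads_seen++;
}

static void demo_asan_store4(unsigned long addr)
{
	(void)addr;
	stores_seen++;
}

/* What the programmer wrote is simply: *dst = *src + 1; */
static void increment_copy(uint32_t *dst, const uint32_t *src)
{
	uint32_t tmp;

	assert(dst && src);

	/* What the instrumentation conceptually adds before each access. */
	demo_asan_load4((unsigned long)src);
	tmp = *src;

	demo_asan_store4((unsigned long)dst);
	*dst = tmp + 1;
}
```

Calling increment_copy() once results in exactly one intercepted 4-byte load and one intercepted 4-byte store.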

You will instrument your code with ASan, toss heavy test workloads on the code, and see if something breaks. If something breaks, you go and investigate the breakage to find the problem. The problem will often be one or another instance of buffer overflow, out-of-bounds array or string access, or the use of a dangling pointer such as use-after-free. These problems are a side effect of using the C programming language.

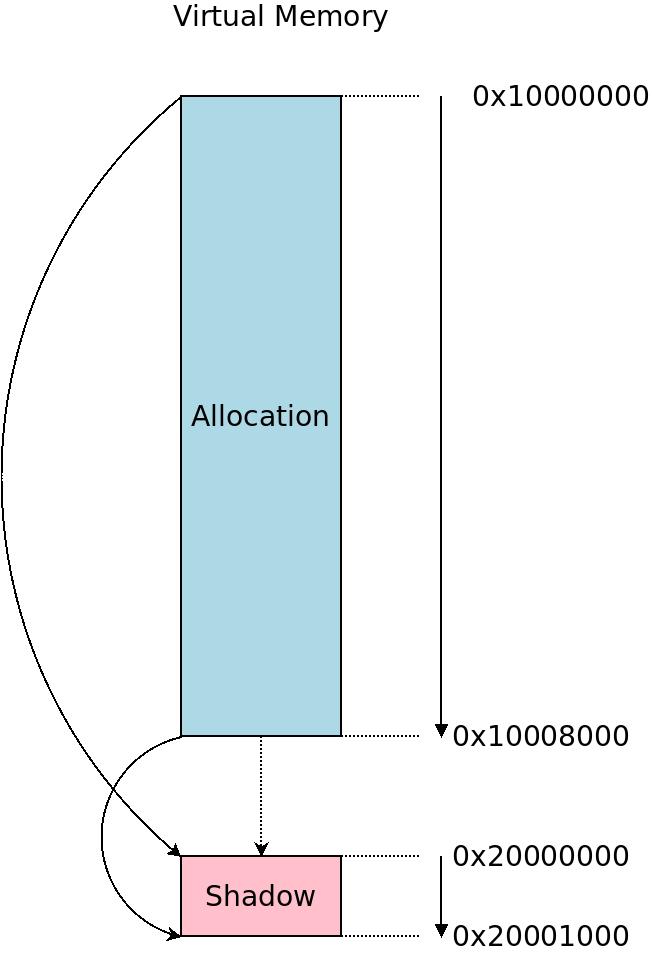

When resolving the mentioned load/store symbols, ASan instrumentation is based on shadow memory, and this is in turn based on the idea that a single byte of shadow memory “shadows” 8 bytes of memory (one bit per byte), so you allocate 1/8 the amount of memory that your instrumented program will use and shadow that to some other memory using an offset calculation like this:

shadow = (address >> 3) + offset

The shadow memory is located at offset, and if our instrumented memory is N bytes then we need to allocate N/8 = N >> 3 bytes to be used as shadow memory. Notice that I say instrumented memory, not code: ASan shadows not only the actual compiled code but mainly and most importantly any allocations and referenced pointers the code maintains. The DATA (constants) and BSS (global variables) parts of the executable image are shadowed as well. To achieve this, userspace links to a special malloc() implementation that overrides the default and manages all of this behind the scenes. One aspect of it is that malloc() will of course return chunks of memory naturally aligned to 8, so that the shadow memory will be on an even byte boundary.
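The offset calculation can be sketched in a few lines of C. The names mem_to_shadow(), shadow_size() and SHADOW_SCALE_SHIFT are made up for this illustration, and the offset is just a parameter rather than any real platform's value.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* 8 bytes of memory per shadow byte, hence a shift by 3. */
#define SHADOW_SCALE_SHIFT 3

/* shadow = (address >> 3) + offset */
static uintptr_t mem_to_shadow(uintptr_t addr, uintptr_t offset)
{
	return (addr >> SHADOW_SCALE_SHIFT) + offset;
}

/* N bytes of instrumented memory need N >> 3 bytes of shadow memory. */
static size_t shadow_size(size_t n)
{
	assert((n & 7) == 0);	/* assume whole 8-byte granules */
	return n >> SHADOW_SCALE_SHIFT;
}
```

With an illustrative offset of 0x20000000, the addresses 0x1000 through 0x1007 all map to the shadow byte at 0x20000200, and 0x1008 maps to the next one. A 4096-byte page needs 512 bytes of shadow.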

The ASan shadow memory shadows the memory you're interested in inspecting for memory safety.


The helper library will allocate shadow memory as part of the runtime, and use it to shadow the code, data and runtime allocations of the program. Some will be allocated up front as the program is started, some will be allocated to shadow allocations at runtime, such as dynamically allocated buffers or anything else you malloc().

The error detection was based on the observation that a shadowing byte with each bit representing an out-of-bounds access error can have a “no error” state (0x00) and 8 error states, in total 9 states. Later on a more elaborate scheme was adopted. Values 1..7 indicate how many of the bytes are valid for access (if you malloc() just 5 bytes then it will be 5) and then there are magic bytes for different conditions.

When a piece of memory is legally allocated and accessed the corresponding bits are zeroed. Uninitialized memory is “poisoned”, i.e. set to a completely illegal value != 0. Further, SLAB allocations are padded with “red zones” poisoning memory in front of and behind every legal allocation. When accessing a byte in memory, it is easy to verify that the access is legal: is the shadow byte == 0? That means all 8 bytes can be freely accessed and we can quickly proceed. Else we need a closer look. Values 1 thru 7 mean that only the first 1 thru 7 bytes are valid for access (partly addressable), so we check that, and any other value means uh oh.

- 0xFA and 0xFB mean we have hit a heap left/right redzone so an out-of-bounds access has happened

- 0xFD means access to a freed heap region, so use-after-free

- etc

Decoding the hex values gives a clear insight into what access violation we should be looking for.
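The check described above can be sketched as follows. This is a simplified illustration, not the actual runtime code: it assumes the access is 1 to 8 bytes and does not cross an 8-byte granule, and the function names are hypothetical. The magic values are the userspace ones listed above.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Is an access of `size` bytes at `addr` legal, given the shadow byte
 * covering the 8-byte granule that `addr` falls into? */
static bool shadow_allows(uint8_t shadow, uintptr_t addr, size_t size)
{
	assert(size >= 1 && size <= 8);

	if (shadow == 0)
		return true;	/* all 8 bytes addressable: fast path */
	if (shadow > 7)
		return false;	/* poison or magic value: uh oh */

	/* Partly addressable: only the first `shadow` bytes are valid,
	 * so the last accessed byte must fall below that count. */
	return (addr & 7) + size <= shadow;
}

/* Decode a shadow byte into a human-readable hint. */
static const char *shadow_describe(uint8_t shadow)
{
	switch (shadow) {
	case 0x00:
		return "fully addressable";
	case 0xFA:
	case 0xFB:
		return "heap redzone (out-of-bounds)";
	case 0xFD:
		return "freed heap region (use-after-free)";
	default:
		return shadow <= 7 ? "partly addressable" : "other poison value";
	}
}
```

For a 5-byte allocation the shadow byte is 5, so a 4-byte access at the start of the granule passes, while a 4-byte access starting at byte 2 would touch byte 5 and is rejected.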

To be fair the one bit per byte (8-to-1) mapping is not compulsory. This should be pretty obvious. Other schemes such as mapping even 32 bytes to one byte have been discussed for memory-constrained systems.

All memory access calls (such as any instance of dereferencing a pointer) and all functions in the library such as all string functions are patched to check for these conditions. It's easy when you have compiler instrumentation. We check it all. It is not very fast but it's bearable for testing.

Researchers in one camp argue that we should all be writing software (including operating systems) in the programming language Rust in order to avoid the problems ASan is trying to solve altogether. This is a good point, but rewriting large existing software such as the Linux kernel in Rust is not seen as realistic. Thus we paper over the problem instead of using the silver bullet. Hybrid approaches to using Rust in kernel development are being discussed but so far not much has materialized.

KASan Arrives

The AddressSanitizer (ASan) was written with userspace in mind, and the userspace project is very much alive.

As the mechanism used by ASan was quite simple, and the compilers were already patched to handle shadow memory, the Kernel Address Sanitizer (KASan) was a natural step. At this point (2020) the original authors seem to spend a lot of time with the kernel, so the kernel hardening project has likely outgrown the userspace counterpart.

The magic values assigned to shadow memory used by KASan are different:

- 0xFA means the memory has been freed so accessing it means use-after-free.

- 0xFB is a freed managed resource (devm_* accessors) in the Linux kernel.

- 0xFC and 0xFE mean we access a kmalloc() redzone indicating an out-of-bounds access.

This is why these values often occur in KASan splats. The full list of specials (not very many) can be found in mm/kasan/kasan.h.

The crucial piece to create KASan was a compiler flag to indicate where to shadow the kernel memory: when the kernel Image is linked, addresses are resolved to absolute virtual memory locations, and naturally all of these, plus the area where the kernel allocates memory (SLABs) at runtime, need to be shadowed. As can be seen in the kernel Makefile.kasan include, this boils down to passing the flags -fsanitize=kernel-address and -asan-mapping-offset=$(KASAN_SHADOW_OFFSET) when compiling the kernel.

The kernel already had some related tools, notably kmemcheck which can detect some cases of use-after-free and references to uninitialized memory. It was based on a slower mechanism, so KASan has since effectively superseded it, and kmemcheck was removed.

KASan was added to the kernel in a commit dated February 2015 along with support for the x86_64 architecture.

To exercise the kernel to find interesting bugs, the inventors were often using syzkaller, a tool similar to the older Trinity: it bombs the kernel with fuzzy system calls to try to provoke undefined and undesired behaviours yielding KASan splats and revealing vulnerabilities.

Since the kernel is the kernel we need to explicitly assign memory for shadowing. Since we are the kernel we need to do some maneuvers that userspace cannot do or does not need to do:

- During early initialization of the kernel we point all shadow memory to a single page of just zeroes making all accesses seem fine until we have proper memory management set up. Userspace programs do not need this phase as “someone else” (the C standard library) handles all memory set up for them.

- Memory areas which are just big chunks of code and data can all point to a single physical page with poison. In the virtual memory it might look like kilobytes and megabytes of poison bytes but it all points to the same physical page of 4KB.

- We selectively de-instrument code as well: code like KASan itself, the memory manager per se, or the code that patches the kernel for ftrace, or the code that unwinds the stack pointer for a kernel splat clearly cannot be instrumented with KASan: it is part of the design of these facilities to poke around at random locations in memory, it's not a bug. Since KASan was added all of these sites in the generic kernel code have been de-instrumented, more or less.

Once these generic kernel instrumentations were in place, other architectures could be amended with KASan support, with ARM64 following x86 soon in the autumn of 2015.

Some per-architecture code, usually found in arch/xxxx/mm/kasan_init.c, is needed for KASan. What this code does is to initialize the shadow memory during early initialization of the virtual memory to point to a “zero page” and later on to populate all the shadow memory with poisoned shadow pages.

The shadow memory is special and needs to be populated accessing the very lowest layer of the virtual memory abstraction: we manipulate the page tables from the top to bottom: pgd, p4d, pud, pmd, pte to make sure that the $(KASAN_SHADOW_OFFSET) points to memory that has valid page table entries.

We need to use the kernel memblock early memory management to set up memory to hold the page tables themselves in some cases. The memblock memory manager also provides us with a list of all the kernel RAM: we loop over it using for_each_mem_range() and populate the shadow memory for each range. As mentioned we first point all shadows to a zero page, and later on to proper KASan shadow memory, and then KASan kicks into action.
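The per-range population can be pictured with a small userspace sketch. This is not kernel code: struct mem_range, SHADOW_PAGE_SIZE and count_shadow_pages() are hypothetical, the ranges stand in for what for_each_mem_range() would hand us, and instead of touching page tables the loop only counts how many 4 KB shadow pages would need backing.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define SHADOW_PAGE_SIZE 4096UL

/* Hypothetical description of one RAM range, covering [start, end). */
struct mem_range {
	uintptr_t start;
	uintptr_t end;
};

static uintptr_t to_shadow(uintptr_t addr, uintptr_t offset)
{
	return (addr >> 3) + offset;
}

/* Walk all ranges and count the shadow pages that need real backing.
 * Ranges whose shadow shares a page are counted twice in this sketch. */
static size_t count_shadow_pages(const struct mem_range *r, size_t n,
				 uintptr_t offset)
{
	size_t pages = 0;
	size_t i;

	for (i = 0; i < n; i++) {
		uintptr_t s, e;

		assert(r[i].start <= r[i].end);

		/* Round the shadow of this range out to whole pages. */
		s = to_shadow(r[i].start, offset) & ~(SHADOW_PAGE_SIZE - 1);
		e = (to_shadow(r[i].end, offset) + SHADOW_PAGE_SIZE - 1) &
		    ~(SHADOW_PAGE_SIZE - 1);

		pages += (e - s) / SHADOW_PAGE_SIZE;
	}

	return pages;
}
```

A 128 MB range needs 16 MB of shadow, which is 4096 pages, and a 32 KB range needs a single shadow page.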

A special case happens when moving from using the “zero page” KASan memory to proper shadow memory: we would risk running kernel threads into partially initialized shadow memory and pull the ground out under ourselves. Not good. Therefore the global page table for the entire kernel (the one that has all shadow memory pointing to a zero page) is copied and used during this phase. It is then replaced, finally, with the proper KASan-instrumented page table with pointers to the shadow memory in a single atomic operation.

Further all optimized memory manipulation functions from the standard library need to be patched as these often have assembly-optimized versions in the architecture. This concerns memcpy(), memmove() and memset() especially. While the quick optimized versions are nice for production systems, we replace these with open-coded variants that do proper memory accesses in C and therefore will be intercepted by KASan.
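As an illustration of what an open-coded variant means, here is a minimal byte-by-byte copy in plain C. The name plain_memcpy() is made up for this sketch and it is not the kernel's implementation. The point is only that every load and store is ordinary C that a compiler can instrument, unlike a hand-written assembly routine.

```c
#include <assert.h>
#include <stddef.h>

/* Byte-wise copy in plain C: each *s load and *d store is visible to
 * the compiler and can therefore be instrumented. */
static void *plain_memcpy(void *dest, const void *src, size_t n)
{
	unsigned char *d = dest;
	const unsigned char *s = src;

	assert(n == 0 || (dest && src));

	while (n--)
		*d++ = *s++;

	return dest;
}
```

This is of course much slower than an assembly-optimized copy, which is the trade-off accepted for a debugging kernel.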

All architectures follow this pattern, more or less. ARM64 supports hardware tags, which essentially means that the architecture supports hardware acceleration of KASan. As this is pretty fast, there is a discussion about using KASan even on production systems to capture problems seen very seldom.

KASan on ARM32

Then there was the attempt to provide KASan for ARM32.

The very first posting of KASan in 2014 was actually targeting x86 and ARM32 and was already working-kind-of-prototype-ish on ARM32. This did not proceed. The main reason was that when using modules, these are loaded into a designated virtual memory area rather than the kernel “vmalloc area” which is the main area used for memory allocations and what most architectures use. So when trying to use loadable modules the code would crash as this RAM was not shadowed.

The developers tried to create the option to move modules into the vmalloc area and enable this by default when using KASan to work around this limitation.

The special module area is however used for special reasons. Since it was placed in close proximity to the main kernel TEXT segment, the code could be accessed using short jumps rather than long jumps: no need to load the whole 32-bit program counter anew whenever a piece of code loaded from a module was accessed. It made code in modules as quick as normal compiled-in kernel code +/– cache effects. This provided serious performance benefits.

As a result KASan support for ARM was dropped from the initial KASan proposal and the scope was limited to x86, then followed by ARM64. “We will look into this later”.

In the spring of 2015 I started looking into KASan and was testing the patches on ARM64 for Linaro. In June I tried to get KASan working on ARM32. Andrey Ryabinin pointed out that he actually had KASan running on ARM32. After some iterations we got it working on some ARM32 platforms and I was successfully stressing it a bit using the Trinity syscall fuzzer. This version solved the problem of shadowing the loadable modules by simply shadowing all that memory as well.

The central problem with running KASan on a 32-bit platform as opposed to a 64-bit platform was that the simplest approach used up 1/8 of the whole address space which was not a problem for 64-bit platforms that have ample virtual address space available. (Notice that the amount of physical memory doesn't really matter, as KASan will use the trick to just point large chunks of virtual memory to a single physical poison page.) On 32-bit platforms this approach ate our limited address space for lunch.

We were setting aside several statically assigned allocations in the virtual address space, so we needed to make sure that we only shadow the addresses actually used by the kernel. We would not need to shadow the addresses used by userspace and the shadow memory virtual range requirement could thus be shrunk from 512 MB to 130 MB for the traditional 3/1 GB userspace/kernel virtual address split used on ARM32. (To understand this split, read my article How the ARM32 Kernel Starts which tries to tell the story.)
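The two figures follow from the 1/8 rule. The sketch below works through the arithmetic. The 512 MB is simply the full 4 GB address space divided by 8. For the 130 MB I am assuming that the shadowed range is the 1 GB of kernel space plus a 16 MB module area. That breakdown is my assumption for illustration and is not spelled out above.

```c
#include <assert.h>
#include <stdint.h>

#define SZ_1M (1024ULL * 1024ULL)
#define SZ_1G (1024ULL * SZ_1M)

/* Shadow needed for a virtual range, in megabytes (1/8 of the range). */
static uint64_t shadow_mb(uint64_t range_bytes)
{
	assert(range_bytes % 8 == 0);
	return (range_bytes >> 3) / SZ_1M;
}
```

shadow_mb(4 * SZ_1G) gives 512 for the whole 32-bit address space, while shadow_mb(SZ_1G + 16 * SZ_1M) gives 130 under the assumption above.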

Sleeping Beauty

This more fine-grained approach to assigning shadow memory would create some devil-in-the-details bugs that will not come out if you shadow the whole virtual address space, as the 64-bit platforms do.

A random access to some memory that should not be poked (and thus lacks shadow memory) will lead to a crash. While QEMU and some hardware were certainly working, there were some real hardware platforms that just would not boot. Things were getting tedious.

KASan for ARM32 development ground to a halt because we were unable to hash out the bugs. The initial patches from Andrey started trading hands and these out-of-tree patches were used by some vendors to test code on some hardware.

Personally, I had other assignments and could not take over and develop KASan at this point. I'm not saying that I was necessarily a good candidate at the time either, I was just testing and tinkering with KASan because ARM32 vendors had a generic interest in the technology.

As a result, KASan for ARM32 was pending out-of-tree for almost 5 years. In 2017 Abbot Liu was working on it and fixed up the support for LPAE (large physical address extension) and in 2019 Florian Fainelli picked up where Abbot left off.

Some things were getting fixed along the road, but what was needed was some focused attention and these people had other things on their plate as well.

Finally Fixing the Bugs

In April 2020 I decided to pick up the patches and have a go at it. I sloppily named my first iteration “v2” while it was something like v7.

I quickly got support from two key people: Florian Fainelli and Ard Biesheuvel. Florian had some systems with the same odd behaviour of just not working as my pet Qualcomm APQ8060 DragonBoard that I had been using all along for testing. Ard was using the patches for developing and debugging things like EFI and KASLR.

During successive iterations we managed to find and patch the remaining bugs, one by one:

- A hard-coded bitmask assuming thread size order to be 1 (4096 bytes) on ARMv4 and ARMv5 silicon made the kernel crash when entering userspace. KASan increases the thread order so that there would be space for redzones before and after allocations, so it needed more space. After reading assembly one line at a time I finally figured this out and patched it.

- The code was switching MMU table by simply altering the TTBR0 register. This worked in some machines, especially ARMv7 silicon, but the right way to do it was to use the per-CPU macro cpu_switch_mm() which looks intuitive but is an ARM32-ism which is why the original KASan authors didn't know about it. This macro accounts for tiny differences between different ARM cores, some even custom to certain vendors.

- Something fishy was going on with the attached device tree. It turns out, after much debugging, that the attached device tree could end up in memory that was outside of the kernel 1:1 physical-to-virtual mapping. The page table entries that would have translated the physical memory area where the device tree was stored were wiped clean, yielding a page fault. The problem was not caused by KASan per se: it was a result of the kernel getting over a certain size, and all the instrumentation added to the kernel makes it bigger to the point that it revealed the bug. We were en route to fix a bug related to big compressed kernel images. I developed debugging code specifically to find this bug and then made a patch for this making sure not to wipe that part of the mapping. (This post gives a detailed explanation of the problem.) Ard quickly came up with a better fix: let's move the device tree to a determined place in the fixed mappings and handle it as if it was a ROM.

These were the major roadblocks. Fixing these bugs created new bugs which we also fixed. Ard and Florian mopped up the fallout.

In the middle of development, five level page tables were introduced and Mike Rapoport made some generic improvements and cleanup to the core memory management code, so I had to account for these changes as well, effectively rewriting the KASan ARM32 shadow memory initialization code. At one point I also broke the LPAE support and had to repair it.

Eventually the v16 patch set was finalized in October 2020 and submitted to Russell King's patch tracker and he merged it for Linux v5.11-rc1.

Retrospect

After the fact three things came out nice in the design of KASan for ARM32:

- We do not shadow or intercept highmem allocations, which is nice because we want to get rid of highmem altogether.

- We do not shadow the userspace memory, which is nice because we want to move userspace to its own address space altogether.

- Personally I finally got a detailed idea of how the ARM32 kernel decompresses and starts, and the abstract concepts of highmem, lowmem, and the rest of those wild animals. I have written three different articles on this blog as a result, with ideas for even more of them. By explaining how things work to others I realize what I can't explain and as a result I go and research it.

Andrey and Dmitry have since worked on not just ASan and KASan but also on what was initially called the KernelThreadSanitizer (KTSAN) but which was eventually merged under the name KernelConcurrencySanitizer (KCSAN). The idea is again to use shadow memory, but now for concurrency debugging at runtime. I do not know more than this.